With the rapid advancement of Artificial Intelligence (AI), Machine Learning (ML), and High-Performance Computing (HPC), the demand for ultra-high-speed, low-latency networking has never been greater. AI-driven workloads generate enormous volumes of data that require high-bandwidth, scalable, and efficient network solutions.

The QSFP-400G-DR4 transceiver plays a crucial role in AI data centers, GPU clusters, and large-scale computing environments, enabling seamless high-speed data exchange while minimizing latency.

1. Overview of QSFP-400G-DR4 Transceiver

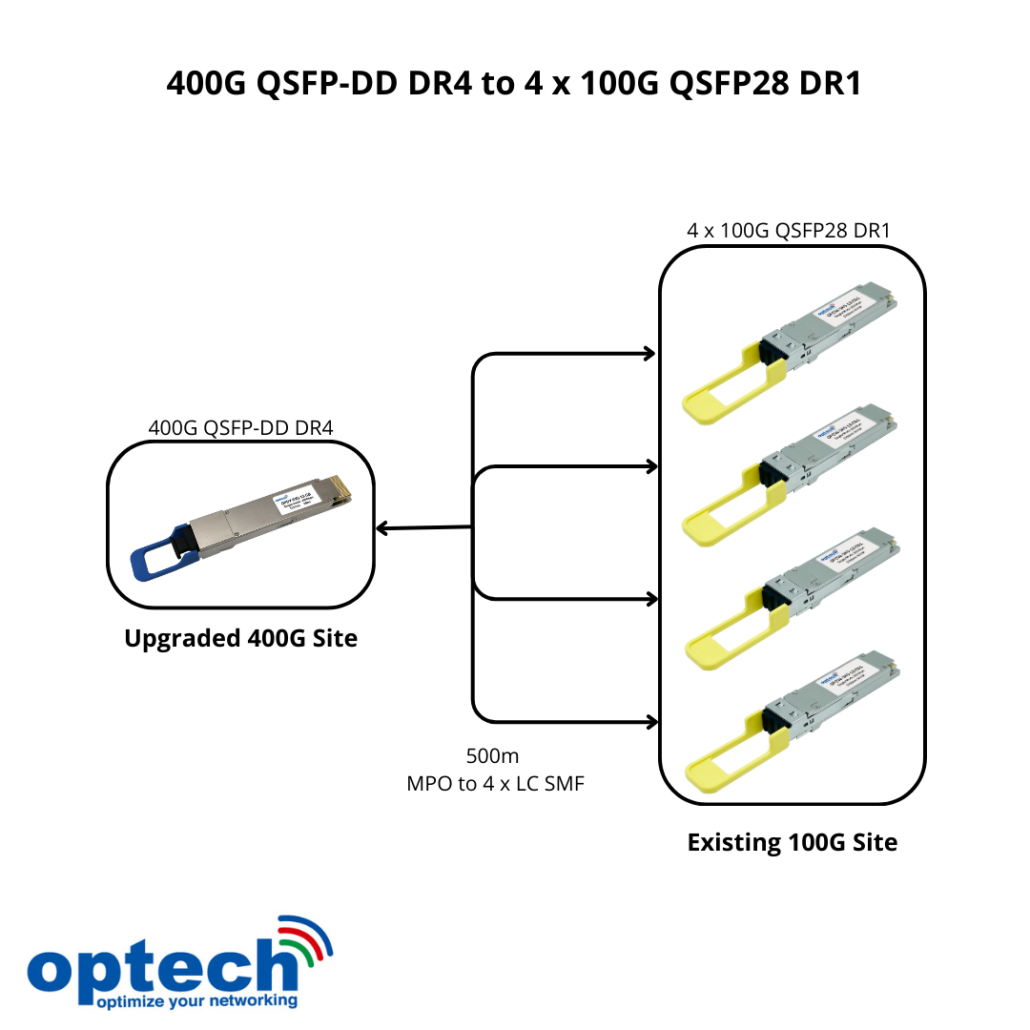

The QSFP-400G-DR4 is a 400Gbps optical transceiver designed for high-density, high-performance data center networking. It uses parallel single-mode fiber transmission (the same approach as PSM4): four 100G lanes, each using PAM4 modulation at 1310 nm on its own transmit/receive fiber pair, carried over an MPO-12 connector (8 of the 12 fibers are used).

Key Features of QSFP-400G-DR4:

✔ Total 400Gbps throughput (4x100G lanes)

✔ Up to 500 meters over Single-Mode Fiber (SMF)

✔ Low power consumption (~10W per module), helping contain power budgets in dense AI racks

✔ Compliant with the IEEE 802.3bs 400GBASE-DR4 standard

✔ QSFP-DD (Quad Small Form-factor Pluggable Double Density) form factor for high-density networking
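Where does "4x100G" come from on the fiber? The short Python sketch below walks through the commonly cited nominal arithmetic for 400GBASE-R: the 400 Gb/s MAC rate is transcoded (256b/257b), protected by RS(544,514) FEC, split across four lanes and sent as PAM4 (2 bits per symbol). It is a simplified view that ignores alignment-marker details, so treat it as an illustration rather than a restatement of the IEEE 802.3bs text.

```python
from fractions import Fraction

# Simplified, nominal 400GBASE-R line-rate arithmetic (alignment-marker
# details are ignored). Fractions keep the results exact.
MAC_RATE_GBPS = Fraction(400)
TRANSCODE = Fraction(257, 256)   # 256b/257b transcoding
RS_FEC = Fraction(544, 514)      # RS(544,514) FEC overhead
LANES = 4                        # DR4: four parallel lanes per direction
BITS_PER_SYMBOL = 2              # PAM4 carries 2 bits per symbol


def dr4_lane_rates() -> tuple[Fraction, Fraction, Fraction]:
    """Return (aggregate line rate in Gb/s, per-lane rate in Gb/s, per-lane symbol rate in GBd)."""
    aggregate = MAC_RATE_GBPS * TRANSCODE * RS_FEC
    per_lane = aggregate / LANES
    symbol_rate = per_lane / BITS_PER_SYMBOL
    return aggregate, per_lane, symbol_rate


if __name__ == "__main__":
    agg, lane, baud = dr4_lane_rates()
    print(f"aggregate line rate: {float(agg)} Gb/s")    # 425.0
    print(f"per-lane line rate:  {float(lane)} Gb/s")   # 106.25
    print(f"per-lane symbol rate: {float(baud)} GBd")   # 53.125
```

The result, 106.25 Gb/s per lane at 53.125 GBd, is why each optical lane is described as a "100G" lane even though it runs slightly faster than 100 Gb/s on the fiber.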

2. AI Workloads & Networking Challenges

AI-Driven Workloads Generate High Network Traffic

AI training and inferencing involve massive parallel computations across GPUs, CPUs, and TPUs. This results in:

- Large-scale data movement between compute nodes

- High-throughput storage access (NVMe-oF, SSD clusters)

- Real-time AI inferencing and deep learning training

Why Does AI Need 400G Connectivity?

Traditional 100G or 200G networking struggles to keep up with modern AI cluster demands. The shift to 400G transceivers like QSFP-400G-DR4 provides:

✔ Lower per-packet serialization delay for latency-sensitive, real-time AI applications

✔ Scalable, high-bandwidth connections for distributed ML training

✔ Energy-efficient, cost-effective interconnects for large-scale AI clusters
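The latency benefit of a faster link comes mostly from serialization delay: the time needed to clock a packet onto the wire shrinks in proportion to the line rate. A minimal Python sketch, using hypothetical 1500-byte and 9000-byte (jumbo) packet sizes:

```python
def serialization_delay_ns(packet_bytes: int, link_gbps: float) -> float:
    """Time to clock one packet onto the wire, in nanoseconds.

    1 Gb/s is 1 bit per nanosecond, so bits / Gb/s gives ns directly.
    Preamble, inter-frame gap, FEC and propagation delay are not included.
    """
    return packet_bytes * 8 / link_gbps


if __name__ == "__main__":
    # Hypothetical sizes: a standard 1500-byte packet and a 9000-byte jumbo frame.
    for size in (1500, 9000):
        for rate in (100, 200, 400):
            delay = serialization_delay_ns(size, rate)
            print(f"{size:>5} B at {rate}G: {delay:.0f} ns")
```

For a 1500-byte packet this gives 120 ns at 100G and 30 ns at 400G. Propagation delay does not change with line rate (light in fiber covers roughly 5 ns per meter, so a full 500 m DR4 span adds about 2.5 µs), and FEC encoding and decoding add their own latency, so the end-to-end gain is smaller than the serialization numbers alone suggest.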

3. 400G QSFP-DR4 in AI Cluster Interconnects

AI Cluster Architecture: Spine-Leaf Network Design

Modern AI and HPC data centers rely on spine-leaf topologies to handle high-bandwidth east-west traffic.

Spine Layer: High-Speed Aggregation

- Uses 400G transceivers to interconnect multiple leaf switches

- Provides ultra-fast communication between AI training nodes

- Minimizes congestion for scalable AI deep learning models
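In a two-tier spine-leaf fabric where every leaf has one uplink to every spine, the spine port count caps the number of leaves and the leaf uplink count sets the number of spines. The sketch below captures that sizing rule; the 64-port switches and the 32/32 uplink/downlink split are hypothetical example values, not a specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeafSpineDesign:
    """Hypothetical two-tier spine-leaf fabric of fixed-radix 400G switches.

    Assumes every leaf has exactly one uplink to every spine.
    """
    spine_ports: int     # 400G ports per spine switch
    leaf_uplinks: int    # 400G uplinks per leaf, one per spine
    leaf_downlinks: int  # leaf ports facing GPU servers and storage

    @property
    def spines(self) -> int:
        # One uplink per spine, so the uplink count sets the spine count.
        return self.leaf_uplinks

    @property
    def max_leaves(self) -> int:
        # Each spine port terminates one leaf, so spine radix caps the leaf count.
        return self.spine_ports

    @property
    def host_ports(self) -> int:
        return self.max_leaves * self.leaf_downlinks

    @property
    def fabric_links(self) -> int:
        # One leaf-to-spine link per (leaf, spine) pair.
        return self.max_leaves * self.spines

    @property
    def fabric_transceivers(self) -> int:
        # Each optical link needs a module at both ends.
        return 2 * self.fabric_links


if __name__ == "__main__":
    d = LeafSpineDesign(spine_ports=64, leaf_uplinks=32, leaf_downlinks=32)
    print(d.spines, d.max_leaves, d.host_ports, d.fabric_transceivers)  # 32 64 2048 4096
```

Because every leaf-to-spine link consumes two modules, the spine layer alone accounts for thousands of 400G transceivers even in this modest example.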

Leaf Layer: AI Compute & Storage Connections

- Connects GPU servers, storage clusters, and compute nodes

- Supports low-latency AI inferencing with high-speed data movement

- Suited to AI workloads running on NVIDIA, AMD, and Google accelerators
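A leaf switch's oversubscription ratio compares host-facing bandwidth to spine-facing bandwidth; 1:1 means the leaf can forward all host traffic upward at once. A small sketch with hypothetical port counts:

```python
def oversubscription(downlinks: int, down_gbps: float,
                     uplinks: int, up_gbps: float) -> float:
    """Leaf oversubscription: host-facing bandwidth / spine-facing bandwidth.

    1.0 means non-blocking (1:1); 3.0 means 3:1.
    """
    return (downlinks * down_gbps) / (uplinks * up_gbps)


if __name__ == "__main__":
    # Hypothetical leaf: 48 x 100G server ports, 8 x 400G uplinks.
    print(oversubscription(48, 100, 8, 400))    # 1.5
    # Hypothetical AI leaf: 32 x 400G down, 32 x 400G up.
    print(oversubscription(32, 400, 32, 400))   # 1.0
```

Reaching the same 1.5:1 ratio with 100G uplinks would take 32 uplink ports instead of 8. Heavy, bursty distributed-training traffic is one reason AI back-end fabrics are commonly designed to be non-blocking.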

AI Compute Layer: GPU-to-GPU Communication

- Direct 400G links between AI accelerators, GPUs, and TPUs

- Enables high-speed data exchange for large-scale AI models

- Reduces training time and increases processing efficiency
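To make "reduces training time" concrete: in data-parallel training, gradients are commonly combined with a ring all-reduce, in which each of N GPUs sends and receives about 2(N-1)/N times the gradient size S. The sketch below turns that into a bandwidth-only lower bound; the 10 GB gradient size and 8-GPU ring are hypothetical, and per-step latency, congestion and overlap with compute are ignored.

```python
def ring_allreduce_seconds(grad_bytes: float, n_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only lower bound for one ring all-reduce.

    Each GPU sends (and receives) 2 * (N - 1) / N * S bytes, where S is the
    gradient size. Per-step latency, congestion and compute overlap are ignored.
    """
    if n_gpus < 2:
        return 0.0  # nothing to exchange
    bytes_sent = 2 * (n_gpus - 1) / n_gpus * grad_bytes
    return bytes_sent * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    GRAD_BYTES = 10e9  # hypothetical 10 GB of gradients per step
    for gbps in (100, 400):
        t = ring_allreduce_seconds(GRAD_BYTES, n_gpus=8, link_gbps=gbps)
        print(f"{gbps}G: {t:.2f} s per all-reduce")
```

Under these assumptions the exchange takes about 1.4 s per step at 100G versus about 0.35 s at 400G; over many thousands of training steps that difference adds up.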

4. Why Is 400G QSFP-DR4 Ideal for AI Data Centers?

| Feature | AI Data Center Benefits |
| --- | --- |
| 400Gbps Bandwidth | Handles large AI training datasets efficiently |
| Low Latency & High Throughput | Reduces communication bottlenecks for deep learning |
| Energy Efficient (~10W Power) | Minimizes power consumption in AI clusters |
| Long Reach (500m over SMF) | Supports large-scale AI and cloud data center networks |
| QSFP-DD Form Factor | Enables high-density deployments in modern AI fabrics |

AI workloads require fast, reliable, and scalable interconnects. The QSFP-400G-DR4 meets these needs by enabling real-time data processing and AI acceleration.
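Power figures are easier to compare as energy per bit. Using the ~10 W figure quoted above for a 400G module, the sketch below computes picojoules per bit and the optics power for a hypothetical switch fully populated with 64 DR4 modules; substitute datasheet values for real planning.

```python
def picojoules_per_bit(module_watts: float, data_rate_gbps: float) -> float:
    """Energy per bit in pJ: W / (Gb/s) = nJ/bit, then x1000 for pJ/bit."""
    return module_watts * 1000 / data_rate_gbps


def optics_power_watts(ports: int, module_watts: float) -> float:
    """Total transceiver power for a fully populated switch."""
    return ports * module_watts


if __name__ == "__main__":
    MODULE_WATTS = 10.0  # ~10 W figure quoted for the QSFP-400G-DR4
    print(picojoules_per_bit(MODULE_WATTS, 400))   # 25.0
    print(optics_power_watts(64, MODULE_WATTS))    # 640.0 (hypothetical 64-port switch)
```

Plugging in the datasheet power of the lower-speed module being replaced gives a like-for-like pJ/bit comparison.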

5. Deployment Scenarios for 400G QSFP-DR4 in AI

AI Model Training & Deep Learning

- Interconnects AI supercomputers such as NVIDIA DGX systems and H100/A100 GPU clusters

- Enables large-scale distributed ML model training

- Handles terabytes of training data efficiently

Hyperscale AI Cloud Infrastructure

- Suited to hyperscale AI platforms such as Google Cloud AI, AWS AI, and Microsoft Azure AI

- Supports real-time AI analytics, automation, and self-learning models

Autonomous Driving & AI Robotics

- Connects self-driving AI edge servers to cloud AI processing

- Facilitates real-time sensor fusion, deep learning, and AI inferencing

Conclusion: 400G QSFP-DR4 Unlocks AI Potential

The QSFP-400G-DR4 transceiver is a key enabler of next-gen AI computing, delivering high-speed, low-latency networking for AI clusters, cloud AI, and deep learning infrastructures.

As AI continues to evolve, high-bandwidth, scalable, and efficient networking solutions like QSFP-400G-DR4 will drive faster AI model training, real-time inferencing, and seamless cloud AI integration.

🚀 Upgrade your AI infrastructure with 400G solutions today! 🔥

About Optech Technology Co. Ltd

Optech Technology Co. Ltd was founded in 2001 in Taipei, Taiwan, with a single purpose: to provide a wide, high-quality portfolio of optical products to a demanding and fast-evolving market.

To keep pace with the continuous growth of IP traffic, Optech's portfolio is constantly expanding. Since its founding, the company has kept up with the latest innovations on the market. Today, we are proud to deliver a large selection of 25G SFP28, 40G QSFP+, 100G QSFP28, 200G QSFP56, 400G QSFP-DD, 800G QSFP-DD and OSFP optical transceivers and cables.

Optech has a large portfolio of products which include optical transceivers, direct attach cables, active optical cables, loopback transceivers, media converters and fiber patch cords.

With optical products spanning data rates from 155 Mbps to 800 Gbps and reaches of up to 120 km, Optech serves a range of industries, including telecom, data centers, and public and private networks.

Juniper Compatibility Matrix for Optech Technology Part Numbers:

| Juniper Part Number | Optech Part Number | Features | Note |
| --- | --- | --- | --- |
| QSFP-400G-DR4 | OPDY-SX5-13-CB | 400Gb/s QSFP-DD DR4, single-mode, MPO, 1310nm, up to 500m | 400G QSFP-DD DR4 to 4 x 100G QSFP28 DR1: An Easy Upgrade to a 400G Network |

For additional information about the QSFP-400G-DR4 or for pricing inquiries, please contact us at sales@optech.com.tw.