As AI-driven data centers and high-performance computing (HPC) environments scale up, the need for seamless, high-speed, and backward-compatible networking solutions becomes essential. For efficient AI cluster interconnectivity, QSFP-DD (Quad Small Form-factor Pluggable – Double Density) modules provide 400G switch ports that stay backward compatible with earlier QSFP modules, while QSFP112 transceivers connect AI servers at 100G per lane (up to 400G per port).

This article explores how to connect 400G ports with backward-compatible QSFP-DD modules while using QSFP112 transceivers for AI servers, to build scalable, low-latency, high-bandwidth AI networks.

1. Understanding QSFP-DD and QSFP112 in AI Networking

🔹 What is QSFP-DD?

QSFP-DD (Quad Small Form-factor Pluggable – Double Density) is a high-speed transceiver form factor with eight electrical lanes. At 50G per lane it carries 400Gbps; at 100G per lane (QSFP-DD800) it carries 800Gbps.

✔ Supports 400G (8x50G PAM4) and 800G (8x100G PAM4)

✔ Backward compatible with single-density QSFP modules (QSFP+, QSFP28, QSFP56); QSFP112 modules also fit, but run at full 400G only in host ports with 100G-per-lane SerDes

✔ Scalable for AI workloads, cloud computing, and hyperscale data centers

✔ Ideal for spine-leaf fabric in AI networking architectures
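The port speeds above are simply lane count multiplied by per-lane rate. A minimal sketch of that arithmetic, using nominal rates (it ignores FEC and encoding overhead, so the actual signaling rates are slightly higher); the `ElectricalInterface` class is a hypothetical helper, not part of any standard or library:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ElectricalInterface:
    """Hypothetical model of a host-to-module electrical interface."""
    name: str
    lanes: int          # number of electrical lanes
    gbps_per_lane: int  # nominal data rate per lane (50G or 100G PAM4)

    @property
    def aggregate_gbps(self) -> int:
        # Aggregate port speed = lanes x per-lane rate
        return self.lanes * self.gbps_per_lane


QSFP_DD_400G = ElectricalInterface("QSFP-DD 400G", lanes=8, gbps_per_lane=50)
QSFP_DD_800G = ElectricalInterface("QSFP-DD 800G", lanes=8, gbps_per_lane=100)

for iface in (QSFP_DD_400G, QSFP_DD_800G):
    print(f"{iface.name}: {iface.lanes} x {iface.gbps_per_lane}G = {iface.aggregate_gbps}G")
```

Doubling the per-lane rate while keeping eight lanes is what takes the same QSFP-DD form factor from 400G to 800G.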

🔹 What is QSFP112?

QSFP112 is a four-lane QSFP transceiver form factor that runs each electrical lane at 100G (112G-class PAM4 signaling), supporting 100G (1x100G), 200G (2x100G), and 400G (4x100G) configurations.

✔ Uses 4x100G PAM4 lanes for efficient AI server-to-network interconnects

✔ Mechanically compatible with QSFP-DD cages; full 400G operation requires a QSFP-DD port with 100G-per-lane SerDes

✔ Carries 400G over four lanes instead of eight, which can reduce per-port power in AI clusters and compute nodes
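Whether a QSFP112 module reaches full speed in a QSFP-DD cage depends on the host's SerDes, not just the mechanical fit. The sketch below encodes a simplified rule, an assumption for illustration only: full rate requires the host to drive at least as many lanes as the module, at the same per-lane rate. Real behavior also depends on switch firmware and vendor support, and the `Interface` and `full_rate_supported` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interface:
    """Hypothetical model of an electrical interface (host port or module)."""
    name: str
    lanes: int
    gbps_per_lane: int

    @property
    def aggregate_gbps(self) -> int:
        return self.lanes * self.gbps_per_lane


def full_rate_supported(host: Interface, module: Interface) -> bool:
    """Simplified assumption: the module runs at its full rate only if the host
    offers enough lanes at the same per-lane rate."""
    return host.gbps_per_lane == module.gbps_per_lane and host.lanes >= module.lanes


qsfp112_400g = Interface("QSFP112 400G", lanes=4, gbps_per_lane=100)
qsfp_dd_400g_port = Interface("QSFP-DD 400G port (8x50G SerDes)", lanes=8, gbps_per_lane=50)
qsfp_dd800_port = Interface("QSFP-DD800 port (8x100G SerDes)", lanes=8, gbps_per_lane=100)

print(qsfp112_400g.aggregate_gbps)                             # 400
print(full_rate_supported(qsfp_dd_400g_port, qsfp112_400g))    # False
print(full_rate_supported(qsfp_dd800_port, qsfp112_400g))      # True
```

Both host ports are nominally "QSFP-DD", but only the one with 100G-per-lane SerDes lines up with the module's four 100G lanes.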

🔹 By combining QSFP-DD and QSFP112, AI data centers can efficiently bridge 400G network switches with AI servers running at 100G per lane (100G to 400G per port).

2. Connecting 400G Ports with Backward Compatible QSFP-DD Modules

🔹 QSFP-DD Backward Compatibility in AI Networks

One of the key benefits of QSFP-DD modules is their ability to support multiple transceiver types, allowing seamless integration with legacy and next-gen hardware.

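| QSFP-DD Module | Compatible with | Use Case in AI Networks |
| --- | --- | --- |
| QSFP-DD 400G DR4 | QSFP112 400G DR4 (point-to-point) | Connects 400G leaf switches to AI servers at 100G per lane |
| QSFP-DD 400G FR4 | 400G FR4 modules (QSFP-DD or QSFP112); not 200G QSFP56 FR4, which uses 50G lanes | Point-to-point 400G links over duplex single-mode fiber up to 2 km |
| QSFP-DD 400G SR8 | 2x 200G QSFP56 SR4 or 8x 50G SR (50G PAM4 lanes); not QSFP28 100G SR4, which uses 25G NRZ lanes | Multimode 400G links, or breakout to lower-speed ports that also use 50G PAM4 lanes |
| QSFP-DD 400G DR4 (4x100G breakout) | 4x 100G DR1 modules (e.g., QSFP28 100G DR1) | AI cluster GPU server interconnects with 100G lanes |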
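The compatibility pairs in the table above follow a simple pattern: two ends interoperate only when their optical lanes use the same rate, modulation, and wavelength band, and only parallel (MPO) optics can be split into breakout legs, because WDM lanes such as FR4's share one fiber pair. A simplified sketch of that rule, under the assumption that reach, FEC mode, connector type, and vendor coding are all compatible (all names here are hypothetical):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Optics:
    """Hypothetical model of a module's optical interface."""
    name: str
    lanes: int          # fibers per direction (parallel) or wavelengths (WDM)
    gbps_per_lane: int  # nominal rate per optical lane
    modulation: str     # "PAM4" or "NRZ"
    band: str           # wavelength band, e.g. "850nm", "1310nm", "CWDM"
    parallel: bool      # True: one lane per fiber (MPO); False: lanes share a duplex pair


def same_lane_format(a: Optics, b: Optics) -> bool:
    return (a.gbps_per_lane, a.modulation, a.band) == (b.gbps_per_lane, b.modulation, b.band)


def can_link(a: Optics, b: Optics) -> bool:
    """Point-to-point: same lane count, same fiber layout, same lane format."""
    return a.lanes == b.lanes and a.parallel == b.parallel and same_lane_format(a, b)


def can_break_out(trunk: Optics, leg: Optics) -> bool:
    """Breakout: the trunk must use parallel fibers, and each leg takes a whole
    number of the trunk's lanes in the same lane format."""
    return (
        trunk.parallel
        and leg.lanes < trunk.lanes
        and trunk.lanes % leg.lanes == 0
        and same_lane_format(trunk, leg)
    )


DR4_400G = Optics("400G DR4", 4, 100, "PAM4", "1310nm", True)
DR1_100G = Optics("100G DR1", 1, 100, "PAM4", "1310nm", False)
FR4_400G = Optics("400G FR4", 4, 100, "PAM4", "CWDM", False)
FR4_200G = Optics("200G FR4", 4, 50, "PAM4", "CWDM", False)
SR8_400G = Optics("400G SR8", 8, 50, "PAM4", "850nm", True)
SR4_200G = Optics("200G SR4", 4, 50, "PAM4", "850nm", True)
SR4_100G = Optics("100G SR4 (QSFP28)", 4, 25, "NRZ", "850nm", True)

print(can_link(DR4_400G, DR4_400G))        # True
print(can_break_out(DR4_400G, DR1_100G))   # True
print(can_link(FR4_400G, FR4_200G))        # False: 100G vs 50G lanes
print(can_break_out(SR8_400G, SR4_200G))   # True
print(can_break_out(SR8_400G, SR4_100G))   # False: 25G NRZ vs 50G PAM4
```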

🔹 QSFP-DD’s backward compatibility eases the transition from existing 100G/200G AI server interconnects to 400G and 800G networks.

3. Using QSFP112 Modules to Connect AI Servers

🔹 How QSFP112 Enhances AI Cluster Efficiency

- Connects AI servers at 100G per lane (100G, 200G, or 400G per port) to 400G switches

- Optimized for AI workloads, handling large-scale deep learning models and high-speed inferencing

- Uses four 100G lanes rather than eight 50G lanes for 400G, which can lower per-port power in AI server farms

- Connects directly to 400G switch ports that support 100G-per-lane SerDes, without requiring costly hardware changes

🔹 Deployment Scenarios for QSFP112 in AI Networking

✔ AI Model Training Clusters – QSFP112 connects GPU servers (e.g., NVIDIA H100-based servers with 400G QSFP112 network adapters) at 100G per lane to the 400G network fabric.

✔ Hyperscale AI Data Centers – QSFP112 enables flexible AI server-to-network connections with low latency and high throughput.

✔ Spine-Leaf AI Networking – QSFP112 supports 4x100G breakout configurations, enhancing scalability in AI-driven HPC environments.
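A 4x100G breakout turns each 400G port into four 100G links, so breakout planning is mostly integer arithmetic. A minimal sketch; the function names and the port and server counts in the example are hypothetical:

```python
def breakout_links(ports_400g: int, legs_per_port: int = 4) -> int:
    """Number of lower-speed links when each 400G port is split into legs (4 x 100G by default)."""
    if ports_400g < 0 or legs_per_port < 1:
        raise ValueError("ports_400g must be >= 0 and legs_per_port >= 1")
    return ports_400g * legs_per_port


def ports_for_servers(server_links: int, legs_per_port: int = 4) -> int:
    """Minimum number of 400G ports needed to serve a given number of 100G server links."""
    if server_links < 0 or legs_per_port < 1:
        raise ValueError("server_links must be >= 0 and legs_per_port >= 1")
    # Integer ceiling division: a partially used port still counts as a whole port
    return (server_links + legs_per_port - 1) // legs_per_port


# Hypothetical example: 16 switch ports in 4x100G breakout, and 50 servers to attach
print(breakout_links(16))     # 64
print(ports_for_servers(50))  # 13
```

With 50 servers, the 13th port carries only two of its four legs; the remaining two stay free for growth.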

4. AI Network Architecture with QSFP-DD and QSFP112

A high-speed, scalable AI network typically follows a Spine-Leaf topology. Here’s how QSFP-DD and QSFP112 fit into modern AI infrastructure:

🔹 AI Spine Layer: 400G High-Speed Backbone

- Uses QSFP-DD 400G transceivers to aggregate traffic from the leaf switches that serve AI compute nodes

- Provides high-bandwidth leaf-to-spine links, reducing bottlenecks for east-west traffic during model training

- Supports multiple QSFP-DD 400G breakout connections to AI servers

🔹 AI Leaf Layer: AI Compute Node Connectivity

- Uses QSFP112 transceivers to connect AI servers at 100G per lane (up to 400G per port)

- Supports efficient AI inference workloads by reducing latency

- Enables scalable interconnects between GPUs, TPUs, and AI accelerators

🔹 AI Server Layer: GPU-to-GPU Communication

- QSFP112 provides direct 100G-per-lane links between GPU servers, supporting fast AI model execution

- Carries NVMe over Fabrics (NVMe-oF) and RDMA over Converged Ethernet (RoCE) traffic for high-speed data access

- Allows flexible AI cluster expansion while maintaining backward compatibility
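The spine and leaf layers described above are usually sized by comparing each leaf's server-facing bandwidth with its spine-facing bandwidth, known as the oversubscription ratio. A minimal sketch; the port splits below are hypothetical examples for a 64-port 400G leaf, and training fabrics are often built at or near 1:1 (non-blocking):

```python
from fractions import Fraction


def oversubscription(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int) -> Fraction:
    """Ratio of server-facing (downlink) capacity to spine-facing (uplink) capacity on one leaf."""
    uplink_gbps = up_ports * up_gbps
    if uplink_gbps <= 0:
        raise ValueError("a leaf needs at least one uplink")
    return Fraction(down_ports * down_gbps, uplink_gbps)


# Hypothetical 64-port 400G leaf switch, split two different ways
print(oversubscription(48, 400, 16, 400))  # 3  (3:1)
print(oversubscription(32, 400, 32, 400))  # 1  (1:1, non-blocking)
```

Using `Fraction` keeps the ratio exact, so a result of 1 reliably means downlink and uplink capacity are equal.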

5. Why Is QSFP-DD + QSFP112 the Best Choice for AI Networking?

| Feature | QSFP-DD + QSFP112 Benefits for AI |
| --- | --- |
| 400G Backbone | Ensures high-speed AI cluster interconnectivity |
| Backward Compatible | Supports 100G, 200G, and 400G connections |
| Scalability | Enables AI infrastructure growth with high-density networking |
| Low Latency | Reduces training time for AI models |
| Energy Efficient | Optimized for low-power AI server deployments |

🔹 By leveraging QSFP-DD and QSFP112, AI data centers can achieve high-performance, future-ready networking while maintaining compatibility with legacy AI hardware.

Conclusion: Unlocking AI Potential with 400G QSFP-DD & QSFP112

As AI applications continue to scale in complexity, network performance is critical to ensuring fast, efficient, and scalable AI model training and inferencing.

The combination of QSFP-DD for 400G backbone connectivity and QSFP112 for AI server interconnects provides:

✅ High-speed AI networking with ultra-low latency

✅ Flexible backward compatibility for smooth AI infrastructure upgrades

✅ Scalable interconnects for AI-driven HPC and cloud environments

🚀 Upgrade your AI network with QSFP-DD and QSFP112 for next-generation performance!

About Optech Technology Co. Ltd

Optech Technology Co. Ltd was founded in 2001 in Taipei, Taiwan, with a single purpose: to provide a wide, high-quality portfolio of optical products to a demanding and fast-evolving market.

To keep pace with the continuous growth of IP traffic, Optech's portfolio keeps expanding. Since its founding, the company has stayed up to date with the latest market innovations. Today, we are proud to offer a large selection of 25G SFP28, 40G QSFP+, 100G QSFP28, 200G QSFP56, 400G QSFP-DD, 800G QSFP-DD, and OSFP optical transceivers and cables.

Optech has a large portfolio of products which include optical transceivers, direct attach cables, active optical cables, loopback transceivers, media converters and fiber patch cords.

With optical products covering data rates from 155 Mbps to 800 Gbps and reaches of up to 120 km, Optech serves industries such as telecom, data centers, and public and private networks.

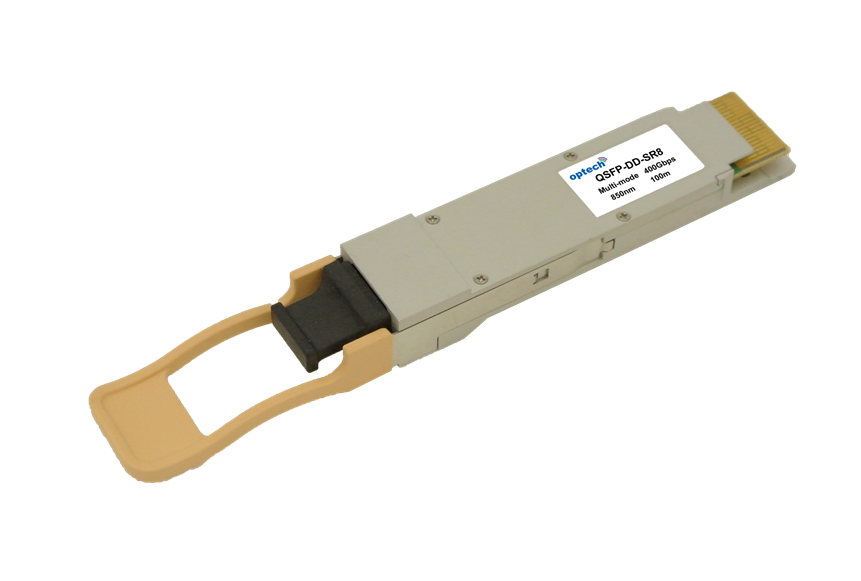

400G QSFP-DD Ordering Information

| Part Number | Optech Part Number | Features | Note |
| --- | --- | --- | --- |
| QSFPDD-400G-SR8 | OPDY-MX1-85-CB | 400Gb/s QSFP-DD SR8, multimode, MPO, 850nm, up to 100m | |
| QDD-400G-DR4 | OPDY-SX5-13-CB | 400Gb/s QSFP-DD DR4, single-mode, MPO, 1310nm, up to 500m | 400G QSFP-DD DR4 to 4 x 100G QSFP28 DR1: An Easy Upgrade to a 400G Network |
| QDD-400G-DR4+ | OPDY-S02-13-CBS | 400Gb/s QSFP-DD DR4+, single-mode, MPO, 1310nm, up to 2km | |
| QDD-400G-LR4 | OPDY-S10-13-CBE | 400Gb/s QSFP-DD LR4, single-mode, LC, 1310nm, up to 10km | |
| QDD-400G-FR4 | OPDY-S02-13-CB | 400Gb/s QSFP-DD FR4, single-mode, LC, 1310nm, up to 2km | |
| QDD-400G-SR4.2-BD | OPDY-WX5-85-CB | 400Gb/s QSFP-DD BiDi SR4.2, multimode, MPO, 850nm/910nm, up to 100m | QDD-400G-SR4.2-BD to 4X QSFP-100G-SR1.2 |
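As a rough first pass when choosing from the 400G QSFP-DD ordering table, the candidates can be filtered by fiber type, connector, and required reach. The sketch below transcribes the fiber type, connector, and maximum reach from that table; the helper names and the shortest-reach-first ordering (shorter-reach optics are usually the less expensive option) are assumptions for illustration, not Optech selection guidance:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Module:
    part_number: str
    fiber: str      # "MMF" (multimode) or "SMF" (single-mode)
    connector: str  # "MPO" or "LC"
    reach_m: int    # maximum reach from the ordering table, in meters


# Values transcribed from the 400G QSFP-DD ordering table
CATALOG = [
    Module("QSFPDD-400G-SR8", "MMF", "MPO", 100),
    Module("QDD-400G-SR4.2-BD", "MMF", "MPO", 100),
    Module("QDD-400G-DR4", "SMF", "MPO", 500),
    Module("QDD-400G-DR4+", "SMF", "MPO", 2_000),
    Module("QDD-400G-FR4", "SMF", "LC", 2_000),
    Module("QDD-400G-LR4", "SMF", "LC", 10_000),
]


def candidates(distance_m: int, fiber: str, connector: Optional[str] = None) -> List[Module]:
    """Modules whose reach covers the distance, shortest reach first (stable for ties)."""
    matches = [
        m for m in CATALOG
        if m.fiber == fiber
        and m.reach_m >= distance_m
        and (connector is None or m.connector == connector)
    ]
    return sorted(matches, key=lambda m: m.reach_m)


print([m.part_number for m in candidates(300, "SMF")])
# ['QDD-400G-DR4', 'QDD-400G-DR4+', 'QDD-400G-FR4', 'QDD-400G-LR4']
print(candidates(1500, "SMF", connector="LC")[0].part_number)  # QDD-400G-FR4
```

Breakout requirements, cabling already in place, and host compatibility still need to be checked separately.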

400G QSFP112 Ordering Information

| Part Number | Optech Part Number | Features | Note |
| --- | --- | --- | --- |
| 400G QSFP112 SR4 | OPEY-MT5-85-CB4 | 400Gb/s QSFP112 SR4, multimode, MPO, 850nm, up to 50m | Compatible with NVIDIA MMA1Z00-NS400 |
| 400G QSFP112 DR4 | OPEY-SX5-13-CB4 | 400Gb/s QSFP112 DR4, single-mode, MPO, 1310nm, up to 500m | |

For additional information about 400G QSFP-DD and 400G QSFP112 transceivers, or for pricing inquiries, please contact us at sales@optech.com.tw